AI Innovations Enhancing Modern Infrastructure Management Systems

Outline:

– Introduction: Why automation, predictive analytics, and IoT matter for modern infrastructure

– Section 1: Automation’s role in reliable, repeatable, and safe operations

– Section 2: Predictive analytics for asset health, forecasting, and planning

– Section 3: IoT as the sensor mesh that makes physical assets legible to software

– Section 4: Integrating the stack—architectures, data flows, and security

– Section 5: Conclusion and a pragmatic roadmap for infrastructure leaders

Introduction

Infrastructure has always been a dance between physics and logistics: concrete meets code, steel meets schedules, and budgets spar with uncertainty. What’s changed is the fidelity and speed of feedback. Machines now speak through sensors, data flows in real time, and software can orchestrate complex responses that once demanded long meetings and longer maintenance windows. Against a backdrop of aging assets, climate volatility, and talent shortages, three capabilities are redefining what “good” looks like: automation, predictive analytics, and the Internet of Things. Together they create a loop—sense, anticipate, act—that lowers risk while squeezing more performance from existing investments. This article explores the interplay between these capabilities with practical examples, clear trade-offs, and patterns you can adapt without ripping and replacing your entire stack.

Automation: The Quiet Engine of Reliable Infrastructure Operations

Automation in infrastructure management is less about flashy robots and more about dependable, repeatable actions executed at machine speed. Think of it as a careful conductor ensuring every task (provisioning, patching, scaling, failover) is performed the same way every time, with change controls baked in. The core value shows up in reduced variance: less configuration drift, predictable deployments, and faster recovery when incidents occur. For teams managing utilities, transit systems, logistics hubs, or data facilities, consistent execution is often the difference between a minor hiccup and a headline.

Where automation delivers outsized value:

– Routine yet high-stakes tasks: patch cycles, certificate renewals, backups, and configuration baselines.

– Event-driven remediation: auto-isolating failing nodes, rerouting traffic, or spinning up capacity when thresholds are breached.

– Change management: pre-deployment checks, canary releases, staged rollouts, and automatic rollback on error signals.

Practically, this is implemented through declarative definitions of desired state, workflow engines that enforce policy, and guardrails that prevent risky actions outside maintenance windows or without approvals. A municipal water utility can, for example, automate pump scheduling against dynamic electricity tariffs while honoring reservoir constraints. A transport network can auto-rotate logs, validate signaling configurations, and run synthetic tests after updates. By coordinating these processes, operators see shorter and more predictable mean time to recovery, fewer change-induced incidents, and clearer audit trails.
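To make the idea of desired state plus guardrails concrete, here is a minimal sketch. It assumes settings are held in plain dictionaries and that a change may only be applied inside a maintenance window and with an approval flag; the names `MaintenanceWindow`, `plan_changes`, and `reconcile` are hypothetical and not taken from any particular tool.

```python
from dataclasses import dataclass
from datetime import datetime, time


@dataclass(frozen=True)
class MaintenanceWindow:
    """Daily window in local time; may wrap past midnight (assumed policy)."""
    start: time
    end: time

    def contains(self, moment: datetime) -> bool:
        t = moment.time()
        if self.start <= self.end:
            return self.start <= t < self.end
        # Window wraps midnight, e.g. 22:00 to 04:00.
        return t >= self.start or t < self.end


def plan_changes(desired: dict, actual: dict) -> dict:
    """Return only the settings whose actual value differs from the desired one."""
    return {key: value for key, value in desired.items() if actual.get(key) != value}


def reconcile(desired: dict, actual: dict, now: datetime,
              window: MaintenanceWindow, approved: bool):
    """Apply the diff only when every guardrail passes; otherwise report why not."""
    changes = plan_changes(desired, actual)
    if not changes:
        return "in_sync", {}
    if not window.contains(now):
        return "blocked_outside_window", changes
    if not approved:
        return "blocked_needs_approval", changes
    actual.update(changes)  # stand-in for the real apply step
    return "applied", changes
```

Because `reconcile` computes a diff rather than replaying a script, running it twice is harmless: the second run finds nothing to change and reports `in_sync`.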

However, over-automation is a real risk when teams automate processes they do not fully understand. If a flawed procedure is encoded in scripts, the error propagates at scale. A sound approach includes: progressive rollout with kill switches; observability tied directly to automation outcomes; and periodic “chaos drills” to validate failovers. Documentation must evolve with code, and access controls should separate who can author workflows from who can execute them in production. Finally, automation should align with service-level objectives: the right action at the right time, not simply “more automation.” When thoughtfully governed, automation becomes an unobtrusive safety net and an amplifier of human judgment rather than a replacement for it.
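A progressive rollout with a kill switch might look roughly like the sketch below. The stage percentages, the `apply` and `healthy` callbacks, and the `kill_switch` callable are all illustrative assumptions; a real system would also perform the rollback rather than only signalling that one is needed.

```python
from typing import Callable, Dict, List, Sequence


def progressive_rollout(
    targets: Sequence[str],
    apply: Callable[[str], object],
    healthy: Callable[[str], bool],
    stages: Sequence[int] = (10, 50, 100),          # cumulative percent per stage (assumed)
    kill_switch: Callable[[], bool] = lambda: False,
) -> Dict[str, object]:
    """Apply a change in widening stages, stopping on a kill switch or failed health check."""
    updated: List[str] = []
    for percent in stages:
        cutoff = max(1, len(targets) * percent // 100)
        for target in targets[len(updated):cutoff]:
            if kill_switch():                       # operator-controlled emergency stop
                return {"status": "halted", "updated": updated}
            apply(target)
            updated.append(target)
        # Observability gate: every target touched so far must still be healthy.
        if not all(healthy(t) for t in updated):
            return {"status": "rollback_needed", "updated": updated}
    return {"status": "complete", "updated": updated}
```

The health gate runs after each stage, so a flawed change is caught while it affects only part of the fleet instead of all of it.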

Predictive Analytics: From Reactive Repairs to Anticipatory Care

Predictive analytics shifts maintenance and planning from the rear-view mirror to the windshield. By modeling how assets behave over time, teams can anticipate failures, optimize spares, and schedule interventions before a breakdown disrupts service. Industry studies commonly report sizable gains: less unplanned downtime, lower maintenance costs, and longer asset life. Exact figures vary by sector and maturity, but programs that couple clean data with disciplined operations often achieve double-digit percentage improvements in availability and cost per asset hour.

The pipeline looks like this: ingest historical work orders, sensor streams, environmental data, and usage cycles; engineer features that capture stressors (vibration spectra, temperature gradients, switch counts, harmonics, duty cycles); then train models tailored to the task. For time-to-failure, survival analysis and gradient-boosted trees are popular; for anomaly detection on streams, techniques range from statistical baselines to recurrent or temporal convolutional networks; for capacity and demand, time-series forecasting with seasonality and event regressors works well. Crucially, models should be optimized on business outcomes, not just accuracy. Cost-sensitive evaluation—precision on critical failures, recall on early warnings, lead-time utility—ensures predictions translate into better schedules and inventory decisions.
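As one way to make cost-sensitive evaluation tangible, the sketch below picks an alert threshold by total cost rather than by accuracy. It assumes each asset has a model score and a known outcome, and that a missed failure and a false alarm each carry a fixed cost; the function names and the cost figures a caller would pass in are illustrative.

```python
from typing import List, Sequence


def total_cost(scores: Sequence[float], failed: Sequence[bool], threshold: float,
               cost_missed: float, cost_false_alarm: float) -> float:
    """Sum the cost of missed failures and unnecessary interventions at one threshold."""
    cost = 0.0
    for score, did_fail in zip(scores, failed):
        flagged = score >= threshold
        if did_fail and not flagged:
            cost += cost_missed          # failure the model did not warn about
        elif flagged and not did_fail:
            cost += cost_false_alarm     # crew dispatched for a healthy asset
    return cost


def best_threshold(scores: Sequence[float], failed: Sequence[bool],
                   cost_missed: float, cost_false_alarm: float) -> float:
    """Try each observed score (plus 'never alert') and keep the cheapest threshold."""
    candidates: List[float] = sorted(set(scores)) + [float("inf")]
    return min(candidates,
               key=lambda t: total_cost(scores, failed, t, cost_missed, cost_false_alarm))
```

When missed failures are expensive relative to false alarms, the chosen threshold drops and the model warns earlier; when inspections are the costly part, it rises. The same model can therefore serve different asset classes with different operating points.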

Common pitfalls include concept drift (assets age, usage patterns shift), label noise (inaccurate failure codes in work orders), and data leakage (training on signals not available at decision time). Proven mitigations are straightforward: rolling retraining windows; strict feature timelines; data-quality rules that flag stale or inconsistent telemetry; and model monitoring that tracks drift, alert fatigue, and intervention ROI. Explainability matters, too. When a model advises replacing a transformer bushing or recalibrating a chiller valve, maintenance teams want to know why. Feature-attribution summaries—elevated vibration at specific frequencies, temperature excursions beyond tolerance, or rising noise floors—build trust and guide inspection.
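A strict feature timeline can be enforced with a small point-in-time lookup, sketched below. It assumes readings arrive as `(timestamp, feature_name, value)` tuples; `features_as_of` is a hypothetical helper name.

```python
from datetime import datetime
from typing import Dict, Iterable, Tuple

Reading = Tuple[datetime, str, float]


def features_as_of(readings: Iterable[Reading], decision_time: datetime) -> Dict[str, float]:
    """Return the latest value per feature using only data available at decision time."""
    latest: Dict[str, float] = {}
    for timestamp, name, value in sorted(readings, key=lambda r: r[0]):
        if timestamp <= decision_time:   # anything later would leak the future
            latest[name] = value
    return latest


readings = [
    (datetime(2024, 3, 1, 8), "vibration_rms", 0.8),
    (datetime(2024, 3, 1, 12), "vibration_rms", 1.4),
    (datetime(2024, 3, 2, 9), "vibration_rms", 3.9),   # recorded after the decision
    (datetime(2024, 3, 2, 9), "failure_code", 1.0),    # the outcome itself
]
snapshot = features_as_of(readings, datetime(2024, 3, 1, 18))
```

Building every training row through a lookup like this keeps post-failure signals, such as the failure code itself, out of the feature set.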

A practical example: a distribution grid operator fuses weather forecasts, load profiles, and partial discharge readings to predict insulator flashover risk during coastal storms. The model surfaces a rank-ordered queue of assets with expected risk and lead time. Planners pre-position crews and spares, and automation primes switching plans for rapid isolation. After the event, outcomes feed back into the model, tightening future estimates. This closed loop highlights the broader point: predictive analytics is not a crystal ball, but a disciplined way to put probabilities to work, reduce guesswork, and make limited resources count where they matter most.

IoT: The Sensor Mesh That Makes Physical Assets Legible

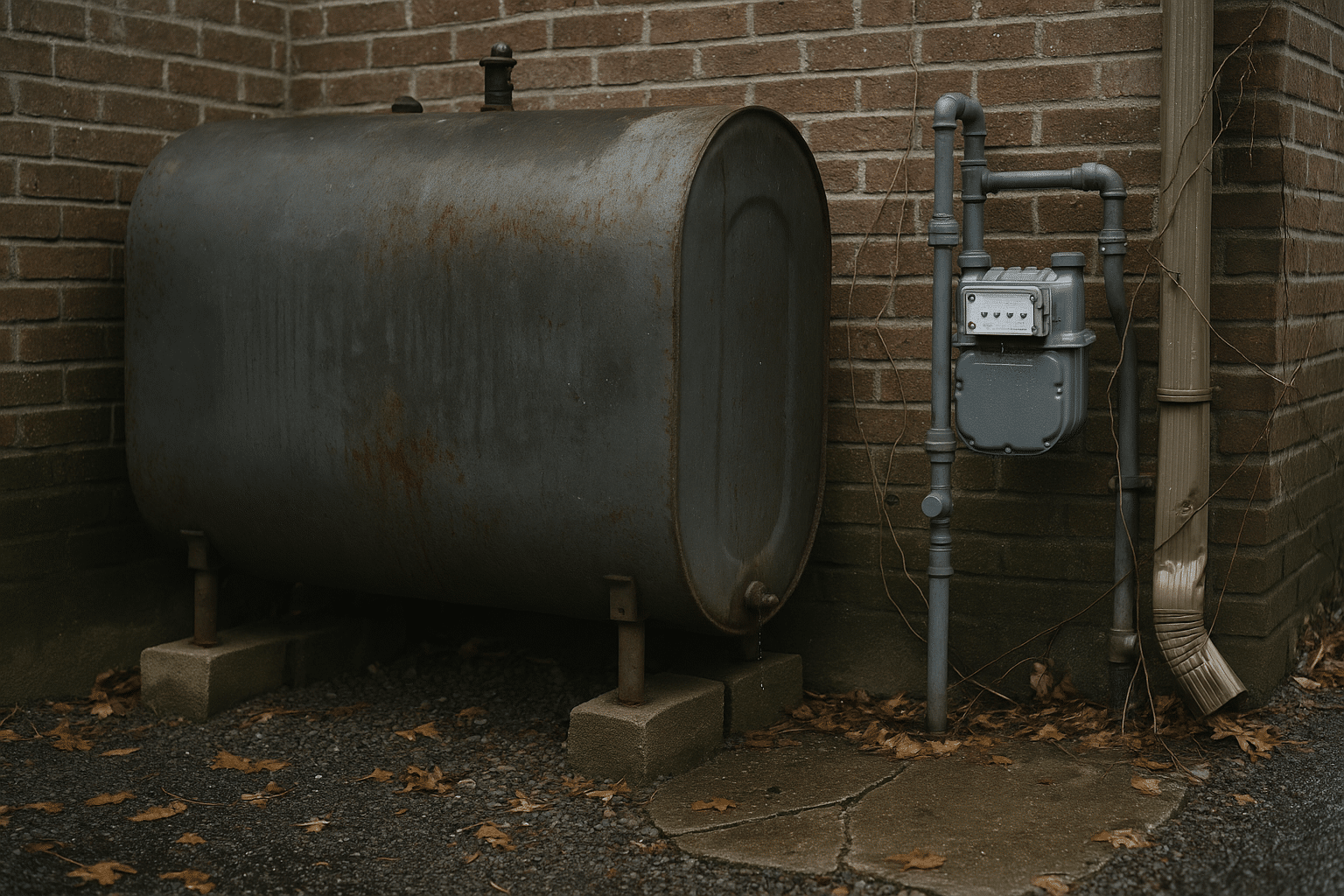

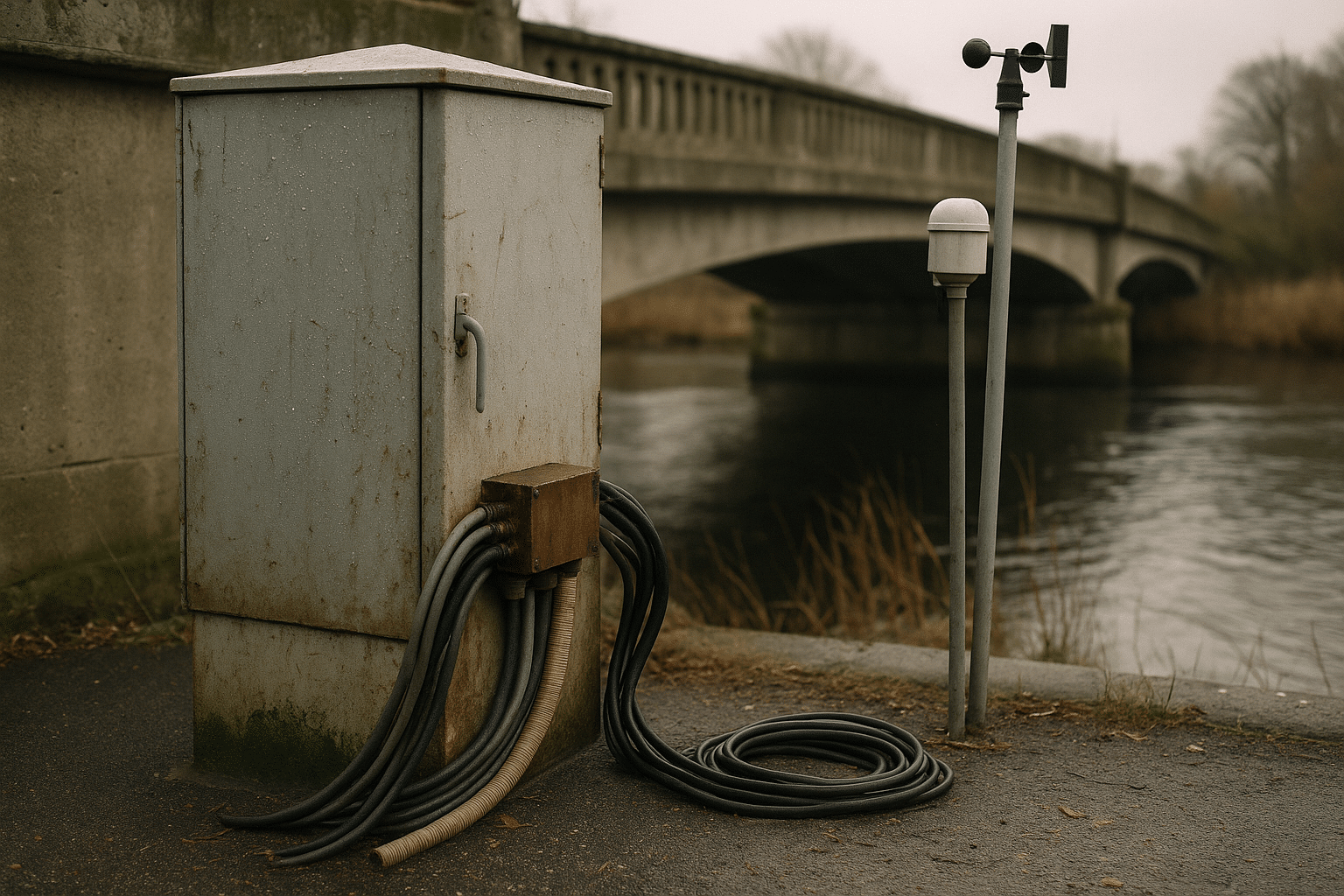

IoT is the nervous system that lets physical infrastructure speak. Sensors measure vibration, flow, pressure, temperature, voltage, emissions, and countless other signals; gateways aggregate and secure them; networks carry data to systems that decide and act. The design space is wide, and getting it right means balancing fidelity, latency, battery life, and cost. A wind farm may stream high-frequency vibration data from nacelles for bearing analysis, while a stormwater network might only need level readings every few minutes—until rainfall intensity crosses a threshold that triggers higher sampling.
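The stormwater case above can be sketched as a threshold rule that switches sampling rates. The specific thresholds and intervals are made-up values, and the hysteresis band (a lower threshold for switching back) is an added assumption to stop the sensor from flapping between modes.

```python
from typing import Tuple


def sampling_interval_s(
    rain_mm_per_h: float,
    currently_fast: bool,
    on_threshold: float = 10.0,    # switch to fast sampling above this (assumed)
    off_threshold: float = 6.0,    # return to normal only below this (assumed)
    normal_s: int = 300,
    fast_s: int = 15,
) -> Tuple[int, bool]:
    """Return (seconds between level readings, whether fast mode is active)."""
    fast = rain_mm_per_h > on_threshold or (currently_fast and rain_mm_per_h > off_threshold)
    return (fast_s if fast else normal_s), fast
```

A gateway would call this on each rainfall update and carry the returned mode flag into the next call.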

Useful design choices to calibrate early:

– Protocols and bandwidth: low-power links for sparse data; broadband for rich telemetry; buffered store-and-forward for coverage gaps.

– Edge processing: local filtering, feature extraction, and anomaly flags to cut bandwidth and respond fast when links are unreliable.

– Power strategy: battery sizing, energy harvesting, sleep schedules, and duty-cycling to achieve multi-year field life without frequent truck rolls.
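The power trade-off in the last bullet reduces to simple arithmetic: average current over one wake and sleep cycle, divided into usable battery capacity. The sketch below is a rough first-order estimate only. It ignores self-discharge, temperature effects, and radio retries, and the 80% usable-capacity default is an assumption.

```python
HOURS_PER_YEAR = 24 * 365


def average_current_ma(active_ma: float, active_s: float,
                       sleep_ma: float, period_s: float) -> float:
    """Time-weighted average current for one wake/sleep cycle."""
    if not 0 < active_s <= period_s:
        raise ValueError("active_s must be positive and no longer than period_s")
    sleep_s = period_s - active_s
    return (active_ma * active_s + sleep_ma * sleep_s) / period_s


def battery_life_years(capacity_mah: float, avg_ma: float,
                       usable_fraction: float = 0.8) -> float:
    """First-order field-life estimate from capacity and average draw."""
    return capacity_mah * usable_fraction / avg_ma / HOURS_PER_YEAR
```

Because average current scales with how often the device wakes, halving the reporting interval roughly halves field life when sleep current is negligible. That is why sampling rate and power strategy need to be decided together.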

Interoperability can make or break IoT programs. Mixing legacy assets and new devices calls for standardized schemas and gateways that translate between industrial protocols. Digital twins—data models mirroring physical assets—help unify telemetry, maintenance history, and configuration in one place, enabling consistent queries and rules. Security is non-negotiable: unique device identities, certificate-based authentication, encrypted transport, and signed firmware updates form the baseline. Network segmentation limits blast radius, and continuous device posture checks catch tampering or outdated software.
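A gateway-side translation step into a standardized schema could look like the sketch below. The canonical units (kPa, degrees Celsius), the payload field names, and the small conversion table are illustrative assumptions, not a reference to any specific industrial protocol.

```python
from dataclasses import dataclass

# Conversions into the canonical unit for each quantity (illustrative subset).
TO_CANONICAL = {
    ("pressure", "kPa"): lambda v: v,
    ("pressure", "psi"): lambda v: v * 6.894757,
    ("pressure", "bar"): lambda v: v * 100.0,
    ("temperature", "degC"): lambda v: v,
    ("temperature", "degF"): lambda v: (v - 32.0) * 5.0 / 9.0,
}
CANONICAL_UNIT = {"pressure": "kPa", "temperature": "degC"}


@dataclass(frozen=True)
class Reading:
    asset_id: str
    quantity: str
    value: float
    unit: str


def normalize(raw: dict) -> Reading:
    """Map a device payload onto the shared schema, rejecting unknown units."""
    quantity, unit = raw["quantity"], raw["unit"]
    try:
        convert = TO_CANONICAL[(quantity, unit)]
    except KeyError:
        raise ValueError(f"no conversion for {quantity} in {unit}") from None
    return Reading(raw["asset_id"], quantity, convert(float(raw["value"])),
                   CANONICAL_UNIT[quantity])
```

Rejecting unknown units outright, rather than passing them through, keeps mislabelled telemetry from quietly contaminating downstream rules and models.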

Consider a smart roadway. Embedded strain gauges flag micro-fractures before they widen, roadside nodes monitor air quality, weather stations feed hyperlocal forecasts, and cameras (with footage processed at the edge for privacy) quantify congestion. When rainfall spikes, culvert sensors step up sampling, and pumps start based on both level and upstream flow predictions. Data that’s useful for seconds is handled at the edge; data useful for months flows to long-term stores for planning and model training. Over time, the roadway “learns”: resurfacing schedules align with actual wear patterns, storm responses are pre-computed, and construction detours are timed against predicted traffic elasticity. The result is not gadgets for their own sake, but infrastructure that senses its own condition and communicates it clearly to the systems and people that care.

Architecting the Stack: Marrying Automation, Predictive Models, and IoT at Scale

An integrated infrastructure stack follows a simple flow—sense, decide, act—but the engineering details determine resilience and cost. Start with a data plane that can handle both streams and batches: gateways push sensor data into a messaging layer; stream processors handle filtering, feature extraction, and alerting; batch pipelines land curated datasets for analytics and training. A combined lake and warehouse approach supports cheap storage plus governed, query-ready tables. Above this, a model-serving layer exposes predictions as APIs or event topics with versioning, canarying, and rollback. Finally, an automation plane listens to events and executes runbooks under policy controls.
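The stream-processing stage can be illustrated with a rolling statistical baseline, one of the simpler alerting techniques. In this sketch the window size and z-score limit are arbitrary choices, and the input is an in-memory list standing in for a real message stream.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Iterable, List, Tuple


def detect_anomalies(stream: Iterable[float], window: int = 5,
                     z_limit: float = 3.0) -> List[Tuple[int, float]]:
    """Flag readings that deviate strongly from a rolling baseline of recent values."""
    recent: deque = deque(maxlen=window)
    alerts: List[Tuple[int, float]] = []
    for index, value in enumerate(stream):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            if sigma > 0 and abs(value - mu) / sigma > z_limit:
                alerts.append((index, value))
                continue  # keep the outlier out of the baseline
        recent.append(value)
    return alerts


readings = [10.0, 10.2, 9.9, 10.1, 10.0, 10.1, 25.0, 10.0, 9.9]
alerts = detect_anomalies(readings)
```

In the architecture described above, each alert would be published as an event for the automation plane, while the unfiltered readings continue to the batch path for training.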

Security and reliability are woven throughout:

– Zero-trust principles for device, service, and operator access; mutual authentication everywhere.

– Least-privilege roles for automation systems; human-in-the-loop approvals for high-impact changes.

– Segmented networks and per-tenant namespaces to isolate workloads; defense-in-depth for gateways at the edge.

Edge design is often decisive. During connectivity loss, assets still need to operate safely. Local controllers should enforce minimal viable behavior—safe shutdowns, rate limiting, or autonomous failover. When links return, state must reconcile without double-applying actions. Use idempotent commands, event logs with sequence numbers, and conflict resolution rules baked into automations. Observability is another pillar: metrics, traces, and logs aligned to business services, not just nodes. Tie alerts to service-level objectives, and annotate telemetry with deployment metadata for rapid incident triage.
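Idempotent commands with sequence numbers can be sketched as follows. The example assumes commands set absolute values (for instance "valve to 40 percent") rather than relative adjustments, and that a single monotonically increasing sequence number orders them; `Command`, `EdgeController`, and `replay` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable


@dataclass(frozen=True)
class Command:
    seq: int       # position in the event log
    key: str       # setting to change
    value: float   # absolute target value, never a relative delta


@dataclass
class EdgeController:
    state: Dict[str, float] = field(default_factory=dict)
    last_seq: int = 0

    def apply(self, cmd: Command) -> bool:
        """Apply a command once; duplicates and stale commands are ignored."""
        if cmd.seq <= self.last_seq:
            return False
        self.state[cmd.key] = cmd.value
        self.last_seq = cmd.seq
        return True


def replay(controller: EdgeController, log: Iterable[Command]) -> int:
    """Replay a log in sequence order after reconnect; return how many commands took effect."""
    return sum(controller.apply(cmd) for cmd in sorted(log, key=lambda c: c.seq))
```

When the link returns, the central system can resend its whole recent log without tracking what was delivered: anything the controller has already seen is skipped, so no action is applied twice.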

Data governance keeps the machine honest. Define ownership for each dataset; track lineage from device to dashboard; and enforce retention aligned with regulation. Shared semantics—consistent unit conventions, health states, and asset hierarchies—are the glue that lets analytics and automation interoperate across domains (power, HVAC, transport, water). Testing should mirror production realities: simulate noisy sensors, partial outages, and clock skew; replay historical incidents to validate end-to-end responses. When this architecture is in place, a storm rolling through a region becomes a managed workflow: sensors escalate sampling, models update risk maps, automations pre-stage capacity, and operators oversee the playbook with clarity rather than scrambling through ad hoc fixes.

Secure, Governed, and Practical: A Roadmap and Conclusion for Infrastructure Leaders

Modernizing infrastructure management does not require a moonshot. It benefits from steady steps that compound quickly when aligned to outcomes. A pragmatic 90–180 day plan can deliver meaningful wins while laying foundations for scale. Start by mapping critical services and their failure modes; identify the top pain points, such as unplanned downtime, slow incident response, or opaque asset health; and pick pilots that directly address them. Use simple measures of success, such as shorter mean time to recovery, fewer change-related incidents, lower forecast error, or fewer truck rolls per month.
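Mean time to recovery is easy to baseline before a pilot starts. The sketch below assumes each incident is recorded as a `(detected, resolved)` pair of timestamps, which is an illustrative format rather than any particular ticketing system's export.

```python
from datetime import datetime, timedelta
from typing import Optional, Sequence, Tuple


def mean_time_to_recovery(
    incidents: Sequence[Tuple[datetime, datetime]]
) -> Optional[timedelta]:
    """Average of (resolved - detected) across incidents; None when there are none."""
    durations = [resolved - detected for detected, resolved in incidents]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)


incidents = [
    (datetime(2024, 5, 1, 9, 0), datetime(2024, 5, 1, 9, 30)),    # 30 minutes
    (datetime(2024, 5, 9, 14, 0), datetime(2024, 5, 9, 15, 30)),  # 90 minutes
]
mttr = mean_time_to_recovery(incidents)
```

Computing the same figure over the pilot period and the preceding baseline period gives a like-for-like comparison.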

Suggested sequence:

– Weeks 1–4: Establish data quality baselines, device inventories, and access controls. Stand up a lightweight messaging layer and a governed landing zone for sensor data. Define service-level objectives that tie reliability to business value.

– Weeks 5–10: Implement one event-driven automation with tight guardrails (for example, safe failover of a non-critical subsystem). Build a small predictive model addressing a tangible pain point, such as early detection of a common failure mode. Close the loop with a dashboard that links predictions to recommended actions.

– Weeks 11–18: Expand edge processing where bandwidth is costly or latency matters. Introduce model monitoring and retraining cadence. Scale automations to include approval workflows and staged rollouts. Document runbooks and train operations staff on new procedures.

Throughout, maintain a bias toward explainability and safety. Favor models whose drivers can be communicated clearly to technicians; require automations to log intent, evidence, and outcomes; and keep humans in the loop for irreversible actions. Build cross-functional squads that include operations, reliability engineering, cybersecurity, and procurement, so trade-offs are surfaced early. Finally, don’t neglect storytelling: short post-incident reviews and monthly “show the work” demos build trust and momentum.

Conclusion: Automation gives you repeatability, predictive analytics gives you foresight, and IoT gives you eyes and ears in the field. Woven together, they form a practical operating system for roads, grids, facilities, and campuses. The payoff is fewer surprises, faster recoveries, and a clearer line of sight from data to decision to action. For leaders stewarding critical infrastructure, the path forward is not to chase hype, but to build a loop that senses what matters, anticipates what’s next, and acts with discipline. Begin small, prove value, and let results pull the next investment—not the other way around.